The Misunderstood Nature of AI Hallucinations

Let’s kick things off with this: AI isn’t lying to you. When you ask ChatGPT (or any other model) a question and get a plausible yet incorrect answer, often called an ‘AI hallucination’, it isn’t being deceitful. It’s simply a reflection of how it was trained.

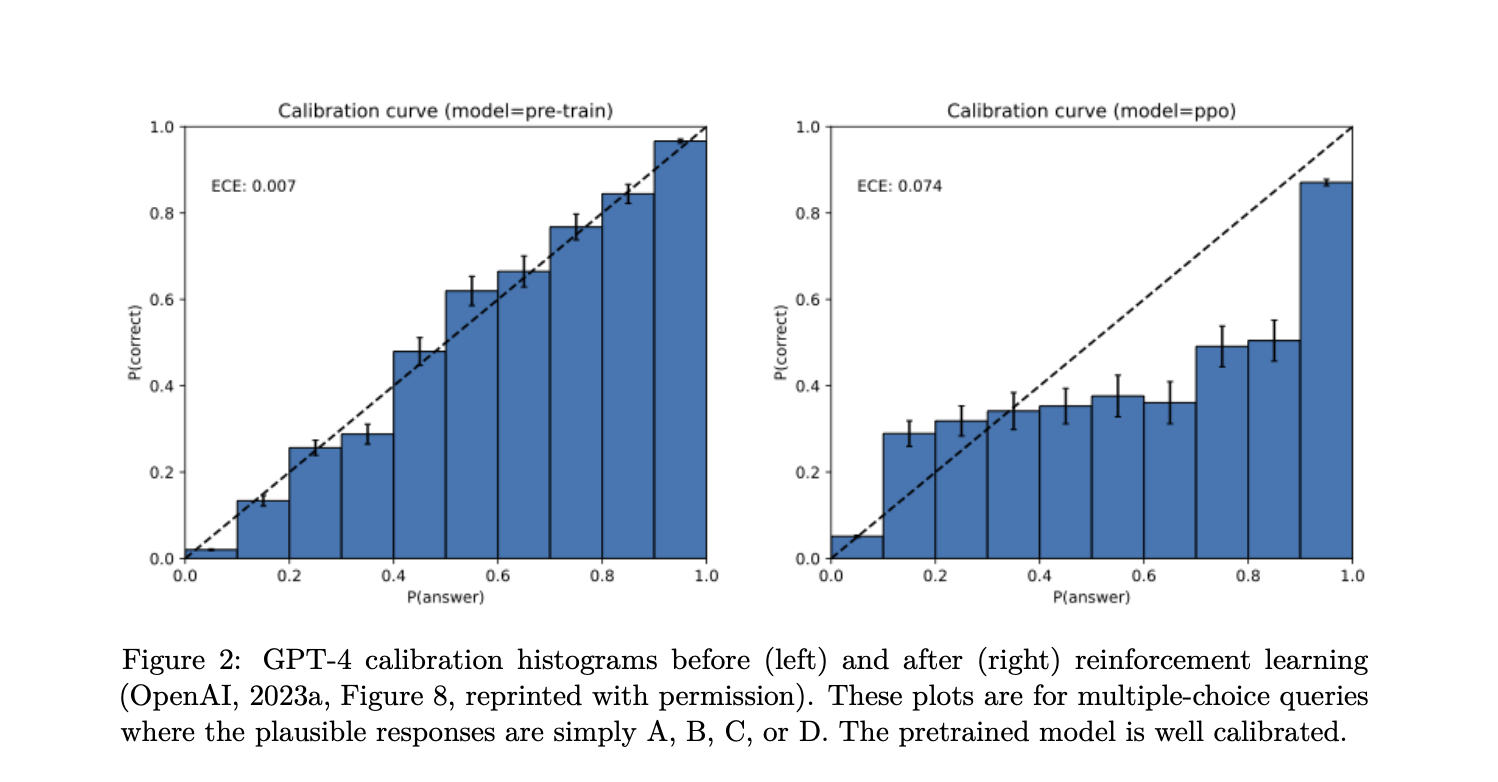

I came across some fascinating research from OpenAI that sheds light on this phenomenon. It argues that hallucinations are more a byproduct of how models are trained and evaluated than an inherent flaw. That left me genuinely optimistic about how we can address, and ultimately solve, these issues.

You might be wondering what exactly qualifies as an AI hallucination.

Simply put, these are statements that sound credible but are, in reality, false. Imagine asking a model for the title of Adam Tauman Kalai’s PhD dissertation (Kalai is one of the researchers behind this work) and receiving three different titles, none of them correct. Or asking for his birthday and getting three wrong dates. How could this happen?

As it turns out, models learn by predicting the next word across enormous amounts of text. Nothing in that text carries an explicit label marking a statement as true or false. They’re not lying; they’re producing the most plausible continuation based on the patterns they picked up from their training data.
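
To make that concrete, here is a toy sketch in Python: a tiny bigram model trained on three made-up sentences. This is our own illustration and is vastly simpler than a real language model. The corpus and the function names `train_bigrams` and `predict_next` are hypothetical. Asked about someone it has never seen, the model still completes the sentence with the most common pattern, because predicting the next word is all it was built to do.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prompt):
    """Return the most frequent word seen after the prompt's last word.

    It returns None only if that last word never appeared in training.
    It has no way to abstain because the *fact* being asked about is unknown.
    """
    last = prompt.split()[-1]
    followers = counts.get(last)  # .get() does not create a new default entry
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training data: note that "dave" never appears.
corpus = [
    "alice was born in march",
    "bob was born in march",
    "carol was born in june",
]
model = train_bigrams(corpus)
print(predict_next(model, "dave was born in"))
```

Nothing in the corpus mentions Dave, yet the model answers "march" just as fluently as it would for Alice, simply because "march" follows "in" most often. That is a hallucination in miniature.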

The work by OpenAI digs deeper into why this happens and why we shouldn’t dismiss AI because of it. Most current evaluations grade on accuracy alone. Under that scheme a guess might earn a point, while “I don’t know” is guaranteed to earn nothing. Think about it: if you’ve ever played a trivia game, you know how tempting it is to throw out an answer even when you’re not sure. The same thing is happening here. The AI is incentivized to give an answer, even if it’s incorrect.
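
The incentive is easy to see with a little arithmetic. The sketch below assumes accuracy-only grading: 1 point for a right answer and 0 for a wrong one, with “I don’t know” also scoring 0. The function name and the 10% confidence figure are illustrative and are not taken from the research.

```python
def expected_score(p_correct: float, abstain: bool) -> float:
    """Expected points for one question under accuracy-only grading.

    A right answer earns 1 point. A wrong answer and "I don't know" both earn 0.
    """
    if abstain:
        return 0.0
    # Wrong answers cost nothing, so only the upside of guessing counts.
    return p_correct * 1.0


# A model that is only 10% sure of its answer still does better by guessing.
print(expected_score(0.10, abstain=False))
print(expected_score(0.10, abstain=True))
```

Any confidence above zero makes guessing the better strategy. A model tuned to top an accuracy leaderboard therefore learns never to abstain.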

The Numbers Don’t Lie: Analyzing Performance

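Let’s talk numbers for a moment, because they tell a compelling story. OpenAI compared two of its models, and the results show how strongly the evaluation method shapes behavior. The gpt-5-thinking-mini model had a 52% abstention rate, meaning it declined to give a specific answer more than half the time. It scored 22% accuracy with a 26% error rate. In contrast, o4-mini abstained only 1% of the time and reached a slightly higher 24% accuracy, but its error rate was a staggering 75%. This paints a clear picture. When a model is pushed to guess rather than acknowledge uncertainty, it gains a couple of points of accuracy while nearly tripling its errors, and an accuracy-only scoreboard would still rank it first.

The research also offers an analogy for why some questions invite guessing in the first place. Suppose you tried to label pet photos with each pet’s birthday. No algorithm could learn that task, because a birthday can’t be read off a photo. Arbitrary facts like a person’s birthday work the same way: there’s no pattern to learn, so a model that always answers is reduced to guessing.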
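
Here is a quick sketch of how the scoring rule changes the ranking, using the rates quoted for the two models. o4-mini’s 24% accuracy is inferred on the assumption that abstentions, correct answers, and errors add up to 100%, as they do for gpt-5-thinking-mini (52 + 22 + 26). The “minus one point per error” rule is a hypothetical alternative chosen for illustration, not the scoring OpenAI used.

```python
# Share of questions in each outcome, as fractions.
results = {
    "gpt-5-thinking-mini": {"abstain": 0.52, "correct": 0.22, "wrong": 0.26},
    # Accuracy inferred as 1 - 0.01 - 0.75, assuming the three outcomes sum to 100%.
    "o4-mini": {"abstain": 0.01, "correct": 0.24, "wrong": 0.75},
}


def accuracy_only(r):
    """Standard leaderboard score: only correct answers count."""
    return r["correct"]


def penalize_errors(r, penalty=1.0):
    """Hypothetical alternative: +1 per correct answer, -penalty per wrong one, 0 for abstaining."""
    return r["correct"] - penalty * r["wrong"]


for name, r in results.items():
    print(f"{name}: accuracy-only={accuracy_only(r):.2f}, "
          f"with error penalty={penalize_errors(r):.2f}")
```

Under accuracy-only grading, o4-mini edges ahead (0.24 vs. 0.22). Once each error costs a point, gpt-5-thinking-mini comes out far ahead (about -0.04 vs. -0.51).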

What does this mean for creatives?

Rather than viewing AI as broken, we can treat hallucinations as an engineering problem waiting for a solution. And here’s the exciting part: we can design evaluation metrics that penalize confident errors more heavily than expressions of uncertainty, and that give credit when a model appropriately admits it doesn’t know. Imagine a world where AI models are encouraged to say, “I’m not sure,” rather than throwing out a guess that could mislead us. This shift could significantly improve the reliability of AI outputs.
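
What might such a metric look like? One option is to announce a confidence threshold t up front. A correct answer earns 1 point, “I don’t know” earns 0, and a wrong answer costs t / (1 - t) points. The expected score of answering is then p - (1 - p) * t / (1 - t), which is positive exactly when the chance of being right, p, exceeds t. The sketch below illustrates this under those assumptions. It is not necessarily the exact scheme the OpenAI researchers propose, and the function names are hypothetical.

```python
def wrong_answer_penalty(t: float) -> float:
    """Points deducted for a wrong answer, given a confidence threshold 0 < t < 1."""
    if not 0 < t < 1:
        raise ValueError("threshold t must be strictly between 0 and 1")
    return t / (1 - t)


def penalized_score(p_correct: float, t: float) -> float:
    """Expected points from answering: +1 if right, minus the penalty if wrong."""
    return p_correct - (1 - p_correct) * wrong_answer_penalty(t)


def should_answer(p_correct: float, t: float) -> bool:
    """Answer only if that beats saying "I don't know", which scores 0."""
    return penalized_score(p_correct, t) > 0


# With t = 0.75, a wrong answer costs 3 points.
print(should_answer(0.60, t=0.75))  # 0.6 - 0.4 * 3 is negative, so abstain
print(should_answer(0.90, t=0.75))  # 0.9 - 0.1 * 3 is positive, so answer
```

Under this rule, a well-calibrated model is rewarded for saying “I’m not sure” whenever its confidence falls below the stated threshold. That is exactly the behavior an accuracy-only scoreboard discourages.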

A Bright Future: Embracing the Creative Potential of AI

As we navigate this evolving landscape, it’s essential to embrace an optimistic outlook on AI’s future. The tools at our disposal have incredible potential to help creatives unlock new possibilities. Rather than letting fear dictate our perception of AI, let’s focus on understanding its capabilities and limitations. With the right mindset, we can engage in bold conversations about how to leverage these technologies for our creative endeavors.

I encourage you to think about how you can incorporate AI into your own processes without fear. Challenge yourself to explore these tools, and don’t be quick to dismiss them when faced with inaccuracies. Instead of seeing a hallucination as a flaw, consider it a prompt for further inquiry. What can you learn from it? How can it guide you toward a deeper understanding of your subject?