I’ve probably made a thousand songs using Udio. That’s not an exaggeration. Most of them were created for background use: music for short videos, mood pieces, or transitions. Some made it into finished edits; most didn’t. They weren’t bad; they just didn’t stick.

That’s what AI music is right now: serviceable. Good enough to sit quietly behind a voiceover, to fill a mood, to make a scene feel complete. But it’s not good enough to make you feel something after it ends. Let’s just say I’m not listening to any of these in my car!

That distinction has been on my mind a lot lately. There’s music that works, and there’s music that stays. AI can give you the first one on demand. The second still belongs to us humans.

For anyone creating video content, tools like Udio and Suno are nothing short of remarkable.

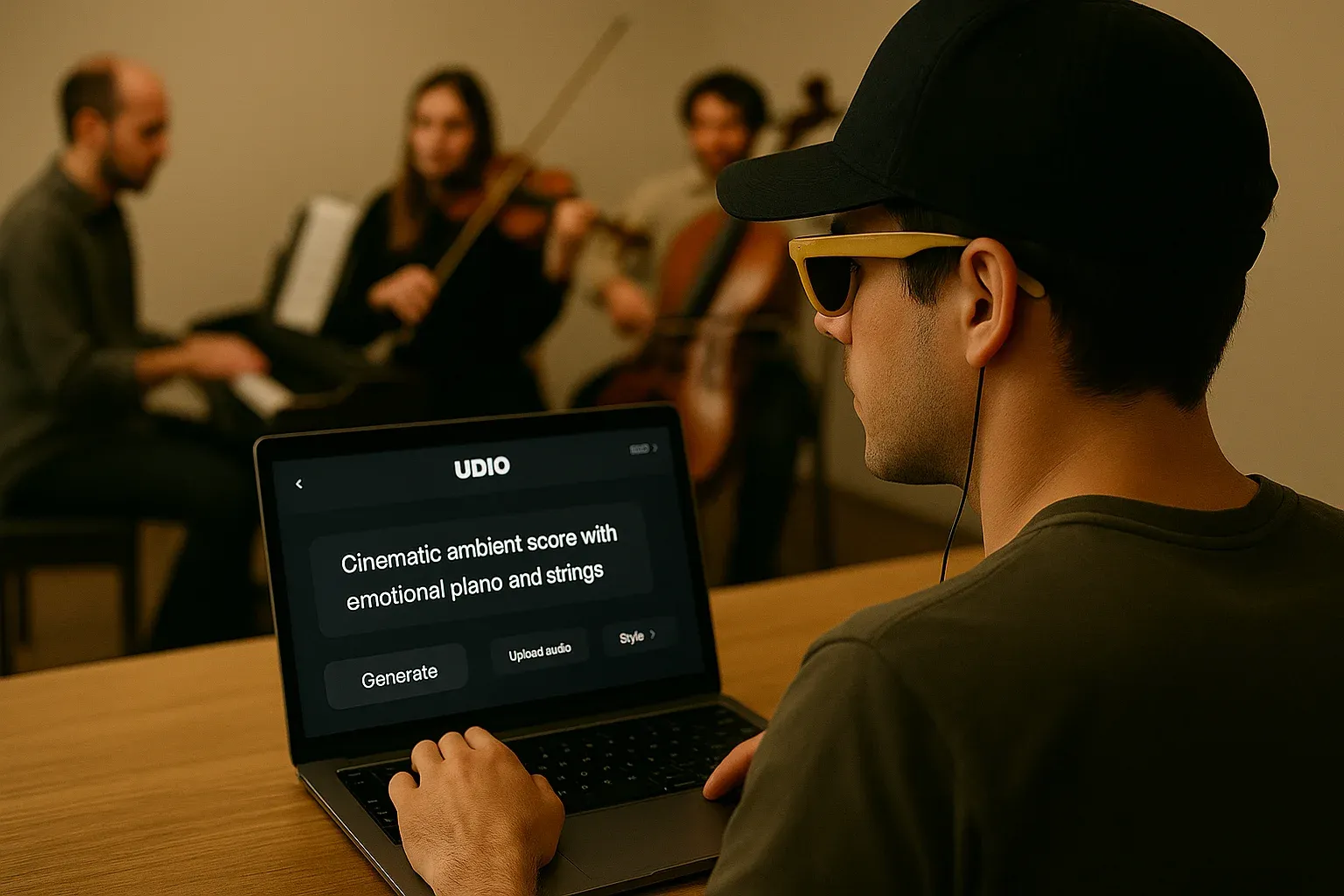

They’re fast, accessible, and versatile. You type something like “cinematic ambient score with emotional classical piano and strings,” and half a minute later, you’ve got something that sounds decent, sometimes genuinely good. I’ll often generate five or six tracks for a single project, test them, and pick the one that best fits the scene or supports the vibe I’m trying to create.

That’s really AI music’s superpower: it disappears. It’s invisible, functional, and endlessly replaceable. But that utility also exposes its limitation. The best AI tracks feel like emotional paint—smooth, consistent, and useful, but covering everything without truly sinking in.

A few weeks ago, I listened to Taylor Swift’s The Life of a Showgirl. I’m not a lifelong Swiftie, but I’ve been humming The Fate of Ophelia, Elizabeth Taylor, and Opalite for days. That’s the difference. Those songs stay. They build little corners in your mind. They loop back in at random moments. You find yourself singing them under your breath while making coffee.

AI can’t do that—not yet. You can feed it the cleverest prompts imaginable, and it’ll generate something that sounds exactly like a song. It’ll have melody, rhythm, structure, all the ingredients. But it won’t have stakes. It has nothing to lose and nothing to confess. It can simulate tone but not vulnerability, structure but not struggle.

When you hear a song that really lands, it’s not just the melody or the production—it’s the memory. You feel the fingerprints. You sense the restraint, the odd decisions, the emotional arithmetic that makes a chord change feel inevitable. AI can’t recreate that because it has no history. It’s not trying to express something—it’s trying to approximate expression.

And here’s where the contradiction gets interesting.

AI music isn’t perfect, and we all know that. Yet when we talk about its limitations, we frame them as flaws to be fixed. “It’s not quite there yet.” “The mix is off.” “The vocals sound a little fake.” With traditional music, those same imperfections are what we fall in love with. We celebrate them. The crack in a voice. The missed snare hit that somehow feels right. The way a note wavers just enough to sound human.

Why the double standard?

I catch myself doing it constantly—endlessly regenerating songs in Udio, chasing the “perfect” version of something that’s not supposed to be perfect in the first place. It’s ironic: the very thing that makes human music resonate—its rough edges, its imperfection—is what I keep trying to eliminate when I use AI.

It makes me wonder if I’m setting myself up to fail. Maybe the goal shouldn’t be to get AI music to sound flawless, but to get it to sound alive. The mistake might not be in the tool but in how I'm using it.

That’s what separates Taylor Swift’s songs—or any lasting piece of music—from what AI can do. They live in their imperfections. They breathe there. They make you feel like you’re witnessing something unfiltered. Perfection, on the other hand, is sterile. It doesn’t invite you in—it just performs for you.

So maybe AI music’s evolution isn’t about getting more precise. Maybe it’s about learning to let go. To stop trying to erase the digital fingerprints and start letting a little weirdness in. Maybe that’s where emotion hides—right in the glitches we’re still trying to sand out.

For creators like me, AI remains an incredible collaborator. It’s fast, flexible, and affordable. It’s perfect for sound design, for mood, for texture. It’s a new kind of creative brush. But it’s not the songwriter. It’s not the confession. It’s not the spark that makes silence feel unbearable.

AI can already make beautiful sound. But sound isn’t song. It can simulate emotion, but it can’t yet provoke it. And maybe it shouldn’t try. Because what we love about human music—the messy, aching, imperfect parts—isn’t what we want fixed. It’s what we want felt.

Until AI learns to fail beautifully, it’ll keep sounding right but feeling wrong.